notes, ramblings, contemplations, transmutations, and otherwise ... on management and directory miscellanea.

Posts

Showing posts from 2011

listing the group membership of a computer in opsmgr [part 3]

sccm: computers with names greater than 15 characters

enable verbose logging on a sccm server

sccm: integrating dell warranty data into configmgr

ds: logon request fails when groups > 1024

getting the first and last day of the month in sql

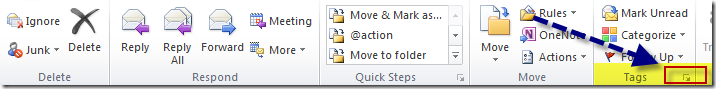

viewing internet headers of emails in outlook 2010

opalis: operator console installation files

open a command prompt to the directory in explorer

atlanta smug (atlsmug) coming up 4/22/11

sccm: client stuck downloading package with bit .tmp files in cache directory

misc: offering remote assistance in windows 7

how to use dropbox to synchronize windows 7 sticky notes

powershell: naming functions and cmdlets

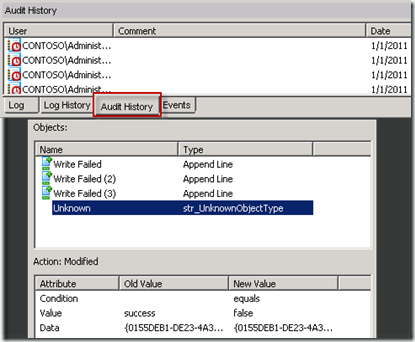

opalis: guidance on troubleshooting failed workflows