opalis: working around limitations with workflow objects and link operators

UPDATE: pete zerger was kind enough to point out that sometimes i don't make sense, and i missed a very obvious point in the documentation. since the post itself is still useful, i didn't just scrap it. :) instead, i added an addendum.

if you've been working with opalis long enough, you will find that there are moments when hacks are required to get from one point to the next. i've been experimenting a lot with nested workflows. it's like evolving from inline scripting to scripting with functions and/or subs.

i discovered that when using trigger policy to run a nested workflow, a bizarre thing happens. even if the nested workflow executes with an error, the status returned by the calling trigger policy object is "success". it didn't make sense at first until i realized that by all accounts, the trigger policy did execute successfully.

well, there's a problem with this. if it comes back as success, even though something failed, the policy will continue on down the path unless you tell it otherwise. enough of that. let's talk specifics about my scenario.

the workflow i created was designed to do one thing: usher alerts from opsmgr into tickets in remedy. since remedy is divided into many different operating queues, i had to consider how to create tickets into the correct queues. i decided to try it based on computer group membership.

in order to get the group membership, i had to query the opsmgr database. i decided to push that into a nested workflow so that it could be reused in other workflows at some later point. the results retrieved by the nested workflow would be written to a text file. the master workflow could then search that text file to cross-check computer names.

now what would happen if the database query failed, and the associated text file never filled with any data? if you're cross-checking the alerts against an empty text file, chances are you will never have a match, and so no tickets are generated.

but if opalis is returning a success on the nested workflow, how do you know the query is failing? that seems simple. if the published data returned from the nested workflow is empty, then obviously the query failed. too bad the link operators don't have any filters for stuff like "is empty" or "is not empty".

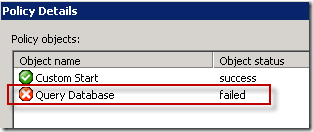

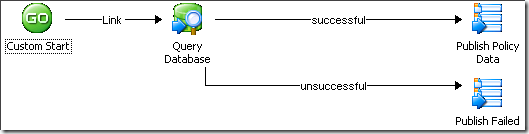

all isn't lost though. to get the effect that we want, we simply have to know what to look for. going to the nested workflow, we can use the query database object status as our criteria to branch appropriately. if successful, the publish policy data object writes the expected server list. if it runs into a warning or failure, we publish static text to a different publish policy data object in the form of "FAILED".

back in the master workflow, we can now use the link operator to cull out anything that tries to come through with "FAILED". if it matches the include filter, the policy processing stops.
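opalis workflows are built in the designer rather than written as code, but the pattern above can be sketched in python to make the logic explicit. this is only a rough model under my own assumptions: `nested_workflow`, `master_workflow` and the `query` callable are hypothetical stand-ins, not opalis objects or apis.

```python
FAILED = "FAILED"  # static text published when the query warns or fails


def nested_workflow(query):
    """Stand-in for the nested workflow: run the query, publish a result.

    `query` is a hypothetical callable returning a list of server names.
    """
    try:
        servers = query()
    except Exception:
        # warning/failure branch: publish the sentinel instead of nothing
        return FAILED
    # success branch: publish the expected server list
    return "\n".join(servers)


def master_workflow(published, alert_computer):
    """Return True when a ticket should be raised for alert_computer."""
    if published == FAILED:
        # the link filter catches the sentinel and processing stops here
        raise RuntimeError("group membership query failed")
    return alert_computer in published.splitlines()
```

the point of the sentinel is that an empty result and a failed query no longer look the same to the master workflow.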

addendum:

keep in mind that link operators do not have an "AND" operation. instead the filters are evaluated as "OR" expressions. however, the include/exclude tabs are separate so mixing and matching is a possibility, assuming you have the right content coming through.

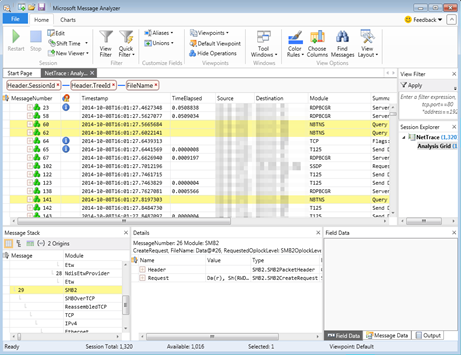
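to make the "OR" behavior concrete, here is a small python model of how i understand a link to be evaluated: any include rule may match, and any exclude rule blocks the link. `link_passes` and the rule functions are hypothetical names for illustration, not anything opalis exposes.

```python
def link_passes(value, include, exclude):
    """Model of a link: rules on each tab are OR'd together.

    include/exclude are lists of callables taking the published value.
    Assumes an exclude match overrides an include match.
    """
    included = any(rule(value) for rule in include)
    excluded = any(rule(value) for rule in exclude)
    return included and not excluded


# mixing the tabs gives an "include AND NOT exclude" effect
include_rules = [lambda v: "srv" in v, lambda v: "db" in v]
exclude_rules = [lambda v: v == "FAILED"]
```

with these rules, `link_passes("srv01", include_rules, exclude_rules)` lets the data through, while `"FAILED"` is blocked.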

in the opalis client user guide, the trigger policy object section has a table that gives this description for the child policy status: the status that was returned by the child policy. it's important to clarify that, by default, a link coming from the trigger policy object filters on the status of the trigger policy object itself, and looks for "success".
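the difference between the two statuses can be modeled in a few lines of python. this is just an illustration: `TriggerResult`, its field names and the status strings are my own hypothetical stand-ins, not the actual published data names.

```python
from dataclasses import dataclass


@dataclass
class TriggerResult:
    status: str        # status of the trigger policy object itself
    child_status: str  # status that was returned by the child policy


def default_link(result):
    # default filter: only looks at the trigger policy object
    return result.status == "success"


def child_aware_link(result):
    # adjusted filter: looks at what the child policy reported
    return result.child_status == "success"


# the trigger ran fine, but the nested workflow hit an error
result = TriggerResult(status="success", child_status="failed")
```

here `default_link(result)` lets processing continue even though the child failed, while `child_aware_link(result)` does not.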

if you're looking for the status coming from the child policy, you should change the link operator filter to look for something like this:
